How Your AI Chats Are Ending Up on Google, and How LeakSnitch Can Stop It

"Private ChatGPT conversations, indexed and searchable on Google."

TL;DR: Your private AI conversations might not be as private as you think. Search engines are indexing shared ChatGPT links, and LeakSnitch can help you catch these leaks before they become public.

It happened quietly, the way many data breaches do: no alarms, none of the drama of a Hollywood hack, just a slow seep of information from where it was trusted to where it was not.

Last week, a security researcher stumbled upon something alarming: private ChatGPT conversations showing up in Google search results, some of them containing personal confessions, sensitive corporate discussions, and even raw API keys. There were no break-ins, no stolen passwords, and no criminal mastermind behind a screen; the data was simply there, sitting on public links, waiting for someone to find it.

The unsettling part isn't just that it happened, but how.

The AI Privacy Illusion

AI chat platforms have quickly become our modern confidants. We ask them things we wouldn't dare tell friends: late-night health worries, half-formed business ideas, the code that runs our companies' core systems.

In our minds, the digital chat is a private conversation, sealed away unless we choose otherwise. But that illusion shatters when a conversation is shared via a public link, when the platform neglects to block search engine crawlers, and when those crawlers (automated bots that never sleep) eagerly index every open page they can reach.

How Crawlers Turn Private Into Public

Search engines like Google run on relentless curiosity: their crawlers roam the web, following every link and collecting and cataloging everything in their path. If a chat platform leaves its doors open (for example, by not marking its shared pages as noindex), the crawlers will happily walk in, read what's inside, and store it for the world to search.
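Platforms usually keep crawlers away from pages with a noindex signal, sent either as an X-Robots-Tag HTTP response header or as a robots meta tag in the page itself. As a rough illustration of what "leaving the doors open" means, here is a minimal Python sketch that checks a fetched page for those signals. The function names are hypothetical and the logic is simplified (it ignores robots.txt and treats crawler-scoped directives as if they applied to every crawler); it is not how Google itself decides what to index.

```python
from html.parser import HTMLParser


def _split_directives(value: str) -> list[str]:
    """Turn "noindex, nofollow" into ["noindex", "nofollow"].

    Simplification: a crawler-scoped value such as "googlebot: noindex" is
    reduced to "noindex" regardless of which crawler it targets.
    """
    return [part.rsplit(":", 1)[-1].strip().lower()
            for part in value.split(",") if part.strip()]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> (or "googlebot") tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.extend(_split_directives(attr.get("content", "")))


def is_indexable(headers: dict[str, str], html: str) -> bool:
    """Return False if the response header or page markup says noindex."""
    directives: list[str] = []
    # Header names are case-insensitive, so compare them lowercased.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            directives.extend(_split_directives(value))
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives.extend(parser.directives)
    # "none" is shorthand for "noindex, nofollow".
    return not any(d in ("noindex", "none") for d in directives)
```

A shared page served with `X-Robots-Tag: noindex`, or carrying `<meta name="robots" content="noindex">`, comes back as not indexable; a page with neither signal is fair game for any crawler that finds the link.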

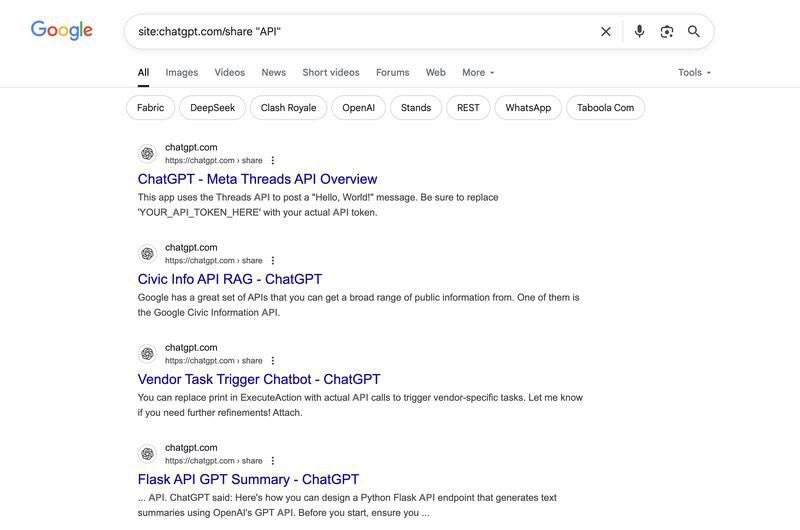

In the wrong hands, even a simple "Google Dork" (a search query that uses advanced operators such as site: to narrow results) can reveal thousands of pages never meant to be public, including conversations once thought to be private.
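As a hedged illustration of the general shape such queries take, framed for a defender checking their own exposure: restrict results to a site or path with site:, then add exact phrases in double quotes. In this Python sketch the chatgpt.com/share path is an assumption about where shared chats are hosted, "Acme Corp" is a placeholder for your own organization's name, and the helper names are hypothetical.

```python
from urllib.parse import urlencode


def build_dork(site: str, phrases: list[str]) -> str:
    """Combine a site: restriction with exact-match phrases into one query."""
    # Double quotes ask the search engine to match each phrase exactly.
    quoted = " ".join(f'"{phrase}"' for phrase in phrases)
    return f"site:{site} {quoted}".strip()


def search_url(query: str) -> str:
    """Build a URL you can open in a browser to run the query on Google."""
    # urlencode percent-encodes ':', '/', and '"' and turns spaces into '+'.
    return "https://www.google.com/search?" + urlencode({"q": query})


# Placeholder organization name; substitute your own.
query = build_dork("chatgpt.com/share", ["Acme Corp"])
print(query)              # site:chatgpt.com/share "Acme Corp"
print(search_url(query))  # https://www.google.com/search?q=site%3Achatgpt.com%2Fshare+%22Acme+Corp%22
```

Running a query like this now and then against your own company or project name is one low-effort way to spot exposed conversations before someone else does.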

Example Google Dork Query

"A simple Google Dork can uncover thousands of publicly exposed pages, including AI conversations."

Real-World Cases

The ChatGPT Leak

The ChatGPT leak is the clearest example. The platform's shared-conversation feature generated URLs that required no login, so anything shared through those links could be read by anyone who had the URL and, once the pages were discoverable, quickly indexed by search engines.
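Links like these follow what is often called the capability-URL pattern: the random string in the link is the only key, so keeping the link secret is the page's only protection. Below is a minimal Python sketch of that pattern, with a hypothetical chat.example.com domain and an in-memory store; it illustrates the general design, not OpenAI's actual implementation.

```python
import secrets
from typing import Optional

# Hypothetical in-memory store mapping share tokens to conversation text.
SHARED: dict[str, str] = {}


def create_share_link(conversation: str,
                      base_url: str = "https://chat.example.com/share/") -> str:
    """Store a conversation and return a public link to it."""
    # 16 random bytes make the token practically impossible to guess...
    token = secrets.token_urlsafe(16)
    SHARED[token] = conversation
    return base_url + token


def open_share_link(url: str) -> Optional[str]:
    """Return the conversation behind a share link, or None if unknown."""
    # ...but nothing here checks who is asking. Anyone holding the URL,
    # including a crawler that found it on a public page, reads everything.
    token = url.rsplit("/", 1)[-1]
    return SHARED.get(token)


link = create_share_link("Here is our staging password: hunter2")
print(open_share_link(link))  # Here is our staging password: hunter2
```

The weakness isn't the randomness of the token; it's that once the URL appears anywhere a crawler can reach, "unguessable" stops mattering.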

Within days, those pages appeared in public search results. The fallout was swift and damaging: exposed API keys ready for abuse, proprietary company information laid bare, and personal details like phone numbers and passport data available to anyone curious enough to look.

Samsung's Costly Lesson

It isn't the only cautionary tale, either. In 2023, Samsung engineers pasted proprietary source code into ChatGPT while troubleshooting. That mistake didn't involve Google indexing, but it still prompted the company to ban staff from using generative AI tools like ChatGPT on company devices.

Both situations highlight the same truth: sometimes, leaks don't require sophisticated hacking at all. They happen because default settings are too open, or because users don't realize that "share" might mean "share with the whole internet."

Anatomy of a Leak

The path from private thought to public embarrassment is short. It begins with someone pasting sensitive information into a chat, continues when that conversation is shared via an unsecured link, accelerates when a crawler indexes it, and is complete the moment a curious searcher stumbles across it.

From there, the leak can spread at the speed of copy-and-paste, becoming impossible to fully erase.

The Leak Path

"Leaks aren't always about hacking — sometimes, it's just bad defaults."

Where LeakSnitch Comes In

Think of LeakSnitch as a smart filter for your browser: an extension that actively monitors what you type or paste.

If it detects sensitive data such as API keys, passwords, or other confidential information, it instantly blocks the action or alerts you before the data leaves your screen. Instead of finding leaks after they happen, LeakSnitch stops them at the source.
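How LeakSnitch recognizes sensitive data isn't spelled out here, so the following is only a minimal sketch of one common approach: pattern matching on pasted text. It is written in Python for readability; a browser extension would run equivalent logic in JavaScript or TypeScript inside a paste handler. The rule set and function names are hypothetical. The prefixes reflect widely documented formats (AWS access key IDs start with AKIA, GitHub classic personal access tokens with ghp_), and real scanners typically combine far larger rule sets with entropy checks.

```python
import re

# Hypothetical rule set: label -> pattern that suggests a secret.
PATTERNS = {
    "OpenAI-style API key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "GitHub personal access token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "Password assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def find_secrets(text: str) -> list[str]:
    """Return the label of every rule that matches the text."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(text)]


def should_block(text: str) -> bool:
    """Decide whether a paste should be stopped before it reaches the chat."""
    return bool(find_secrets(text))


paste = "db password = hunter2, key AKIAABCDEFGHIJKLMNOP"
print(find_secrets(paste))  # ['AWS access key ID', 'Password assignment']
```

Returning labels rather than a bare yes/no lets the alert tell the user what kind of secret it found, which makes a warning far easier to act on.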

LeakSnitch Alert Dashboard

"LeakSnitch alerting users about sensitive data being exposed."

Example: LeakSnitch Saves a Dev Team

Imagine a developer pastes a staging server URL into a ChatGPT conversation, then creates a share link to pass the chat around the team. Hours later, a search engine crawler indexes the shared page. Without monitoring, that server address could stay exposed for weeks.

With LeakSnitch, the team would get an alert almost immediately, giving them the chance to take the page down and request its removal from search results before bad actors noticed.
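In a scenario like this, a paste-time rule could also flag the staging URL itself. Here is a hedged Python sketch of one way to do it: treat private or loopback IP addresses as internal, and flag hostnames containing words like "staging" or "dev". The hint list and function names are hypothetical, not LeakSnitch's actual rules, and a keyword heuristic like this will produce both false positives and misses.

```python
import ipaddress
import re
from urllib.parse import urlparse

# Hypothetical hostname words that often mark non-production environments.
INTERNAL_HINTS = {"staging", "stage", "dev", "internal", "test"}
URL_RE = re.compile(r"https?://[^\s\"'<>]+")


def is_internal_url(url: str) -> bool:
    """Heuristically decide whether a URL points at a non-public system."""
    host = (urlparse(url).hostname or "").lower()
    try:
        # IP literals: private ranges (10.x, 192.168.x, ...) and loopback.
        ip = ipaddress.ip_address(host)
        return ip.is_private or ip.is_loopback
    except ValueError:
        pass  # Not an IP address; fall back to hostname heuristics.
    # Split "api-staging.acme.io" into ["api", "staging", "acme", "io"].
    parts = re.split(r"[.-]", host)
    return host.endswith(".local") or any(part in INTERNAL_HINTS for part in parts)


def internal_urls(text: str) -> list[str]:
    """Return every URL in the text that looks internal."""
    return [url for url in URL_RE.findall(text) if is_internal_url(url)]


message = "Can you check https://api-staging.acme.io/v1 vs https://example.com?"
print(internal_urls(message))  # ['https://api-staging.acme.io/v1']
```

Matching whole hostname parts rather than substrings keeps a host like developer.example.com from being flagged just because it begins with "dev".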

Why This Matters for Everyone

This problem isn't limited to big corporations. Freelancers can accidentally leak client information. Students can expose research data. Small businesses can inadvertently reveal customer records.

Once that data is indexed, getting it back is like trying to pull ink out of water. Removal requests can help, but by then the content may already have been archived, scraped, or shared elsewhere.

The Bottom Line

The reality is that AI tools are powerful, but their safety rails aren't always in place, and they're not always obvious to the user. The best protection is a mix of good habits and active monitoring: avoid pasting sensitive data into AI chats unless you're sure they're private, think twice before generating share links, and keep an eye on what's publicly visible about you or your organization.

Tools like LeakSnitch make that last part far easier, ensuring that if something does slip out, you know about it before the wrong person does.

AI may feel like a trusted confidant, but it doesn't have the discretion of a human friend. It will store, share, and expose exactly what its settings allow, and sometimes what they fail to block.

In a world where search engines can turn a quiet mistake into a global leak, the difference between safety and exposure often comes down to how quickly you realize the mistake happened. LeakSnitch can't stop every slip-up, but it can make sure you're never the last to know.

Ready to Protect Your Data?

Protect your chats. Monitor your presence. Stay ahead of the crawlers.